In 1950, Alan Turing – one of the inventors of the modern computer, and fresh from helping win the war by helping design machines to decipher Germany’s supposedly indecipherable ‘Enigma Code’ – posited a test that he dubbed the ‘imitation game’, but which was later named after him: Could a machine mimic a human so accurately that no human judge could tell the difference? If so, would the machine therefore be considered ‘human’ in a functional sense?

The Turing Test has had any number of applications, from chess (IBM’s Deep Blue beating Kasparov in 1997) to Jeopardy! (Watson besting champ Ken Jennings in 2011). It also comes up in fiction, a notable example being the 1982 film Blade Runner (based on a 1968 novel by Philip K. Dick), wherein the ‘replicants’ (very realistic humanoid androids) can only be distinguished from humans by experts, using detailed questionnaires and optometrist-level eye examinations.

The most recent iteration of advanced ‘artificial intelligence’ making the rounds is Chat-GPT (generative pre-trained transformer), an application that, as its name implies, can generate all sorts of content – sonnets, dissertations, Dr. Seuss poems, everyday conversations, encomiums in Old Scots – which is often very difficult, even for an expert, to distinguish from anything a human might produce.

We should be clear that the machine is not thinking, nor even creating. It’s using human-generated content, and a whole lot of it – a vast swath of what is on the internet – and putting it together in algorithmic fashion, without a mind to guide it (except the minds of those who made the Chat program in the first place). If it did not have that whole treasure trove of a priori content, Chat-GPT would be quite limited; in fact, it could not produce much of anything. Were the only source of its ‘knowledge’ nursery rhymes, it would produce a small trove of decent nursery rhymes, and not much else. It’s not aware it’s doing anything, and in essence is just a vastly more complicated calculator.

That said, it’s got a lot more than rhymes in its trove, and as things now stand, it’s getting very difficult to tell the real from the fake. This is developing into a very real problem cutting across boundaries, from Wall Street bankers to politicians to pop stars. How are we now to tell which trades, talks, podcasts, reviews and articles are real, and which are not? Is what you are reading right now truly yours truly, or is this randomly-generated chat-app content, spurred by a question such as ‘what would jpm write on a given day’? (Trust me, it’s me, at least I think it’s me, unless a Chat-bot has possessed my mind, like some Apollinarian heresy).

Teachers especially are getting anxious. How to grade essays or assignments anymore?

Some are hopeful that good students – those in ‘faith-based colleges’ – would never cheat, or could be taught not to, for such a self-defeating practice would fail them in the long run. The problem is that most students don’t think of the ‘long run’, which most of us don’t think of until we’re already well into the long run, and looking a long ways back. Also, even good people do desperate things in desperate situations – and when deadlines and five essays loom, well, with the easy temptation of such an app accessible on every smartphone, the devil inside may win the day.

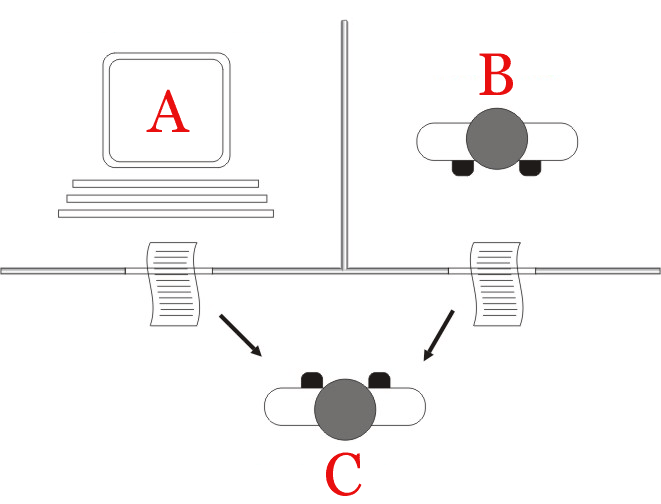

Other teachers want to embrace Chat-GPT, give it a big pedagogical hug, and guide students in using it well (as in, never sweat writing anything on your own ever again – which is sort of where education seemed to be headed even before this). There is talk of a human-computer synthesis, a complementarity of the perfections of both, a prelude, perhaps, to the ‘singularity’, wherein man and machine blend into a transhumanist paragon of technological marvel. Of course, in reality, the machine would win the day. And if God wanted such a symbiosis, to my mind, He would have made us so.

Still others – and I sympathize – want to ban the technology altogether (but bonne chance with that, barring a cosmic-ray burst that fries the infrastructure of the internet and every smartphone on the planet).

Here are some thoughts: As our world falls off various cliffs (if that analogy be possible), life, to remain sane and balanced, must become more local, the very contrary of the enervating globalism, consumerism, socialism, collectivism and sheer artificiality that is suffocating us. By ‘local’ I mean gathering around those whom you love and trust (the two go together), who will support each other in virtue and striving for the good, not least on the pilgrimage to heaven. In such an environment, technologies such as Chat-GPT will be less of a problem, if a problem at all.

Most of the great books are already written – there may still be a few left to write before the Parousia, but they may have to be penned with ink on paper. The same for student essays. I wrote all mine in longhand back in the day. It’s good practice, and gets the mental juices flowing, as Bertie Wooster might say. If a student does produce a superlative essay, and its provenance is unknown, the teacher simply has to ask the student about what he wrote, like the tutors in Oxford did – and perhaps still do – with their pupils in post-essay discussions. If said student knows and can recall nothing, well, then, we have our ‘replicant’. This can also be done with on-line learning (which has its place, but more on that, perhaps, later).

As far as all the new material on the internet, well, what of it? I enjoy reading articles and people’s thoughts, but, again, often on sites that I trust – even if I do delve now and again into those I don’t, to gain a broader perspective. But much of what is said has been said before, and better. We could all do with a bit of ressourcement – delving back to the sources.

And, for all you teachers and other evaluators, keep in mind that A.I. is not fool-proof. In fact, it’s incredibly stupid – the very definition thereof, as in ‘being unaware of what one is doing’, with the same root as ‘stupor’. Hence, it makes glaring errors. We may have to become like those art experts who can detect the work of forgers who are very good at copying the masters: No one’s perfect, and there are always tell-tale signs. It will still be possible to distinguish a chat with a human from one with a chatty machine, for a long time yet, even unto that aforementioned Parousia.

Only then will we all face the only final exam before the Truth Himself, where faking and cheating are impossible.

In the meantime, stay real, and stay true.