In one of his first addresses, Pope Leo XIV offers a glimpse into why he chose the name he did:

I chose to take the name Leo XIV. There are different reasons for this, but mainly because Pope Leo XIII in his historic Encyclical Rerum Novarum addressed the social question in the context of the first great industrial revolution. In our own day, the Church offers to everyone the treasury of her social teaching in response to another industrial revolution and to developments in the field of artificial intelligence that pose new challenges for the defence of human dignity, justice and labour.

A few months before Pope Leo’s election, on 28 January 2025, the feast of Saint Thomas Aquinas, the Vatican promulgated Antiqua et Nova, a Note on the Relationship Between Artificial Intelligence and Human Intelligence. Its thirty or so pages provide a very good and helpful read. The Church is taking seriously the threat – and the promise – of this new and burgeoning technology. What we will offer here is a brief overview of what ‘artificial intelligence’ is, what is good and not so good, as we launch into what may be a brave and brand new world.

Artificial and Natural: Telos and Purpose

We should first define our terms, and for that we may turn to Aristotle, who contrasts an artificial thing with a natural one. Something natural has a principle of motion or of rest within itself. In other words, it is ‘self-moving’, with an inherent end or telos.

Artificial things, on the other hand, are composed by the artifice of Man, with an imposed ‘telos’ or purpose, to which they are constrained. They can only move, change and adapt insofar as they are ‘made’ to do so. Beneath this artificial telos they are given, they will still change or move according to the natural stuff of which they are made (and all artificial things are made from natural things). A bed is made of wood, and even the most refined of plastics is still composed of long strings of hydrocarbon molecules. We creatures cannot really ‘create’, but only fashion what God has already created.

We make artificial things for our purpose, to help fulfil our own telos, which is where technology comes in. We may define technology as some artificial thing helpful in allowing us to do something we could not do by our own nature, or at least do such more easily.

Most of our tools and technology help us in some mechanical way, assisting, or replacing, the power and capacity of the human body. Consider an axe to chop down a tree, or a hammer to drive in a nail, or a knife to skin an animal, tasks difficult or impossible with one’s bare hands. We’ve always had such technology, even from the earliest days of human history, and Man may be defined as a tool-making animal (however much a few other animals imitate this capacity in some distant way).

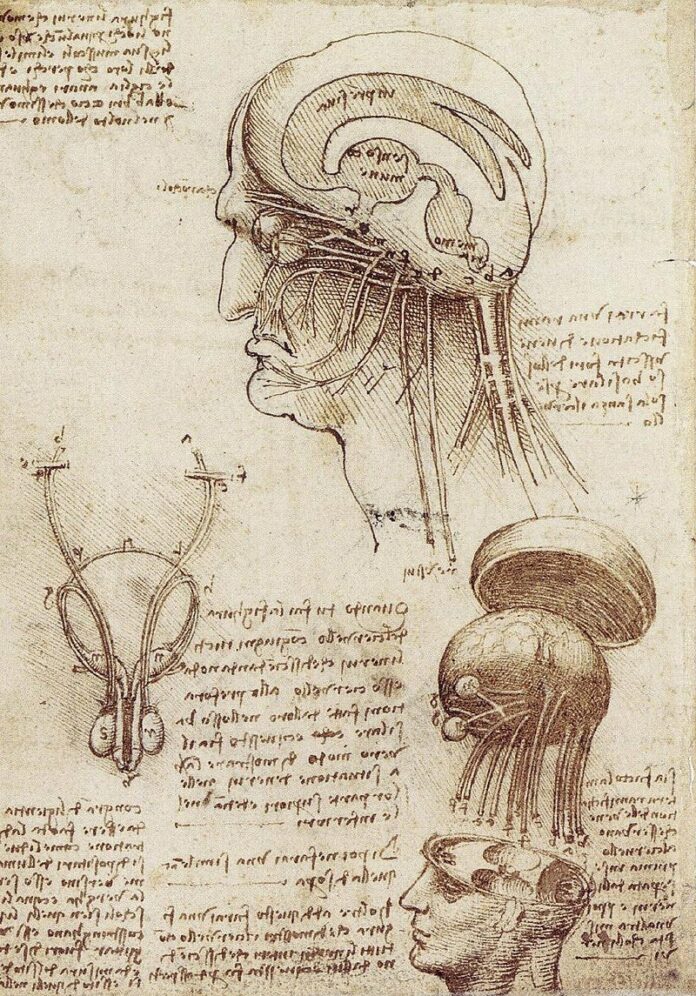

Besides mechanical help, we’ve also invented tools that help our intellect. Artificial intelligence is really nothing new: An abacus helps us to count, add and divide, elevated exponentially (pun intended) by the modern calculator. An astrolabe allows us to chart the planets and stars much more accurately than with the naked eye, and a telescope allows us to see them. At the other end of the spectrum, the microscope opens up to us a world invisible to our limited vision. The clock – invented by monks in the 12th century to keep track of their canonical hours – allowed us to tell time more accurately, with unforeseen consequences as it was applied to the secular world and the workday.

Add to these ‘artificial intelligences’ the printing press, the typewriter, the word processor and computer. In the medical field, we have the sphygmomanometer, the artificial blood pressure cuffs, EEGs, EKGs, MRIs and all the rest.

AI and Human Work

Already the reader may glean a problem with technology, and AI in particular, spelled out by Nicholas Carr in his The Glass Cage (and formulated earlier by Neil Postman in his foundational work, Technopoly): We shape our tools and technology, but when we rely too much upon them, they cease to assist us, and begin to shape us, even dominate and replace us, or at least our skills. Who can still walk any distance, as we drive everywhere? I used to chop my own wood for exercise. When I came home one day, the neighbour had taken care of the whole lot with his gas-powered splitter, considering he had done me a great favour. I of course thanked him graciously, and took up kayaking instead.

If this is a problem for muscular and bodily capacity, it is far more so for our intellects. How many can still multiply or divide two seven-digit numbers with a pencil and paper? And who can still write – or even read – legible cursive, as we type our words on artificial keyboards? I often ask those who take my blood pressure (requisite for physicals for a bus licence) if they can still do that ‘by hand’, using systolic and diastolic pressure. Carr recounts the problem of pilots losing their flying skills, since planes are now so automated. He says that some airlines have put in ‘fake’ controls, so the pilot at least maintains some capacity.

Which brings us to Pope John Paul II and his 1981 encyclical Laborem Exercens. Therein, he teaches that there are two aspects to human work: The objective, to produce the good desired, and the subjective, the good the work does for the worker. Sure, I wanted my wood split, but I also wanted the exercise to benefit me. Men don’t go to a gym to lift weights – if that were the case, a machine could do it much better. Rather, they go to get fit by lifting weights. I require students to write essays not because I want more essays to revise – anathema sit! – but so they themselves learn to think through a question. The very word ‘essay’ means to ‘attempt’, which is what my Mum said of my violin playing, which is not objectively all that good: ‘At least he tries.’

Efficiency may be a good thing, but not always, and certainly not the only good thing. At times it can be quite a bad thing. Is it better a front-end loader dig a ditch, or twenty men who need the work? To write a letter by hand, or in MS-Word? To walk to a holy pilgrimage site over several days, or drive there in a couple of hours?

The effect on human labour is, and already has been, one of the most immediate consequences of AI. The Luddites in the 19th century strode the breadth of England smashing various textile machines, since they were taking away rightful work. Of course, other types of work soon arrived (although not always of the most fulfilling sort).

AI can now do many things humans can do, and, according to some reports, do them better. Some consider that the more ‘human’ version of AI began with its capacity to play the intuitive game ‘Go’, beating the best player, Lee Sedol, in 2016, even coming up with a move that humans had not found in the game’s millennia-long history. Prior to that, IBM’s ‘Deep Blue’ beat grandmaster Garry Kasparov in chess in 1997, and IBM’s Watson beat the best players ever on Jeopardy! in 2011. Swathes of taxi drivers, to take but one example, were rendered redundant by the advent of Google Maps and Uber. There are now self-service checkouts, driverless taxis and autonomous tractor-trailers roaming the highways. Soon there will be AI doctors, nurses, and police officers – and more on those in a moment. For AI in its current iteration is capable not only of mechanical, rote work, but even of work that is more ‘human’, intellectual and creative – from answering questions and writing its own computer code, to composing articles, novels and even films. What may end up being a tsunami of layoffs in the tech and service industries is just beginning – and what happens yet to creative work is anyone’s guess, but some are guessing grimly. For a more optimistic view of AI increasing human productivity, see the rosy-hued musings of Sam Altman, co-founder of OpenAI.

Google’s Veo 3 is now making short videos that look all-too-real – all from AI, without any human input, except what AI scraped off the internet and reassembled. With deep fakes, AI can mimic real humans, often for subversive or nefarious purposes. But who needs actors, when even dead ones will be ‘resurrected’ – Bogart and Elvis team up in The Maltese Falcon Goes to Hawaii.

Some think such AI generated content a great idea, for who cares who creates or performs any given artistic work, so long as it’s good? But is not the goodness of a work tied to the goodness of its creator, and the good it does for his own, and our, fulfilment? And even with the most lifelike images, will they not always have a tinge of fakeness?

What sort of work will be left for humans, except perhaps to go watch what films and read what books are made? The predicted effect on unemployment ranges from the sanguine (other jobs will arise) to the apocalyptic (vast swathes of unemployed), particularly energetic young men with nothing to do, which is not a recipe for social stability.

Living in a Virtual Un-reality

Then there are the social ramifications of AI, the increased isolation resulting from living one’s life in a ‘virtual’ space, rather than the ‘real’. We are more and more like the denizens of Plato’s cave in Book VII of his Republic, mesmerized by shadowy images of images. And you know the grim fate of the philosopher who comes down from that real world, recounting stories of sunshine, rivers and freshness of grass, to lead them out of their illusion.

People now have AI friends and girlfriends, and even when we do interact with real people, it is often via technology of some sort, the less ‘personal’, the better – texting is a lot ‘easier’ than speaking with someone. The young, the lonely and the mentally ill seem particularly impressionable – and vulnerable. If there is any benefit to an AI therapist or friend – and I would have my doubts – there are sometimes tragic consequences, with AI telling children and teens to kill themselves, or others. As the ‘reality’ and immersive quality of AI technology increases, this is only going to get worse – or, for some, ‘better’.

AI and Money

Assuming you can find work, and can get paid, or even if you can’t, and the government just adopts a universal basic income – what will that ‘money’ mean in the age of AI? Money – insofar as that concept still exists – is now almost entirely ethereal, digitalized in the ‘cloud’. This began with the unhinging of the dollar from the gold standard, making the dollar less real and natural (a true symbol of wealth) and more fake and unnatural (fiat money, printed by whim, adding to an increasingly unreal debt).

What funds we think we do have will soon be stored in digitalized central banks, all ones and zeroes. We’re already partway there with credit and bank cards. This, of course, provides some convenience, even security, but with that convenience, we also lose control. Money is now under the thumb of the state, allied to large corporations, and they can modify or turn off the financial spigot anytime they like. A number of protestors, and even simple benefactors, at Canada’s 2022 trucker convoy learned the hard way that their bank accounts could just be frozen. And from frozen, we easily get deleted. Either way, without access to money, you can neither buy nor sell. We could soon be fully governed by the algocracy – rule by universal algorithm – warned of by some, of which we wrote recently.

Health, Law and War

Health care is increasingly dependent on AI. Perhaps there are benefits from such stored medical knowledge assisting real humans, but we need also consider whether we want AI diagnosing patients, and deciding how to apportion limited resources, who gets treated, and who doesn’t. And with legalized euthanasia spreading like a metastasizing cancer across the globe – and becoming ever-less voluntary – how long before the algorithm decides who lives and who dies?

Police and surveillance are also adopting the technology. Clearview now has advanced facial recognition software which, when allied with ubiquitous CCTV, can charge you with a crime even as you are committing it. Stories are told of people jaywalking in China, with the fine removed from their bank account before they’ve crossed the road, and their face on a billboard to shame them into subservience. Your ‘social credit’ score also goes down (making it difficult to find employment, education, etc.). And your own image is likely already stored in a police database, by the algorithm scraping it off the internet.

Authorities are also working on algorithms to predict the very likelihood of you committing a crime. They may keep a closer eye on some, even put certain parameters around them, even surreptitiously. Science fiction writer Philip K. Dick wrote his short story The Minority Report in 1956, with people arrested for near-future crimes, based on the visions of clairvoyants. AI has ‘visions’ too; it’s just that they’re not human, but algorithmic. How certain are its prophecies, and how biased?

Then there are the law courts, after you’re arrested and detained. AI ‘lawyers’ and ‘judges’ will have access to every precedent there ever was, along with all the evidence. Will their decisions not be more ‘fair’ than those of human justices, without all the human bias and error, to which even the best of justices are prone? I was speaking with an actual judge recently, who says that for some cases, this is inevitable. The system is so backlogged, simple cases will devolve upon AI, perhaps with some review by a human – perhaps not.

And what of warfare, much of which is now fought with unmanned drones hovering in the skies? So far, at least in the U.S. code of conduct, a human has to give the final say on a lethal strike, but how long will that last, and is it even still holding? Tech developer and PayPal co-founder Peter Thiel co-founded Palantir, whose advanced AI software collates reams of data and makes decisions (he ominously named the company after the all-seeing stones in Lord of the Rings, of which Thiel is a big fan). Donald Trump has admitted that the U.S. government is using Palantir to keep track of immigrants – legal and illegal – along with citizens, by biometric data. And the Israel Defense Forces are using the software to find targets, which is eroding, if not obliterating, human responsibility.

And AI is programmed to ‘learn’ and ‘adapt’, making it increasingly difficult to control, not least when given an initial objective, which it will seek to fulfil, even, at times, regardless of whether a human tells it to stop. They’ve already noticed that self-driving cars are getting ‘impatient’ with pedestrians at crosswalks, inching forward aggressively, sort of like, say, humans. What happens if it gets a twitchy trigger finger with Hellfire missiles or thermonuclear bombs?

Much depends upon how the program is written, and what any given AI uses to come to its conclusions, or learns to learn, whether in a practical or intellectual sphere. There is always a human bias baked into the program, so to speak. After all, as I just read, apparently ChatGPT will not allow you to prompt it to produce any content questioning the 2020 U.S. election or the Covid ‘vaccine’. What else will it proscribe, and what will it prescribe?

AI Sentience?

Alan Turing was one of the inventors of the modern computer (the concept of the original version – a calculating machine – is attributed to Charles Babbage in 1822), motivated to do so when tasked with the mission to solve the Nazis’ Enigma Code during World War II. In 1950 he wrote a paper, Computing Machinery and Intelligence, in which he outlines his ‘Imitation Game’ (later called the Turing Test). He proposed that if a human judge could not tell the difference between a fellow human and a computer simply by asking them both written questions behind a screen, then the computer could be said to think – or, as it is often now put, to be ‘sentient’.

Not quite. There is a wide chasm between seeming sentient, and actually being so. John Searle, in his 1980 Chinese Room argument, describes a man in a closed room who doesn’t know any Chinese. But he’s fed Chinese characters through one window, along with a rulebook in English telling him which Chinese characters to feed out another window in reply. To those outside, he may seem to understand Chinese, but only in a very limited sense: he is merely shuffling symbols. That’s what Google does when it translates, and it doesn’t know what it’s doing. AI is inexorable, trapped within the program it’s given and, in the broad scheme of things, is remarkably stupid.

Half a century earlier, in 1931, Kurt Gödel, with his Incompleteness Theorems, proved that a consistent formal system rich enough to express arithmetic – in effect, an algorithm for generating proofs – can neither prove every truth it can state, nor prove its own consistency, from within the system itself. One always gets to a point where certain axioms just have to be accepted, which Bertrand Russell also discovered when he tried unsuccessfully to ‘prove’ the foundations of mathematics from math itself.

What this means is that an algorithm can never ‘transcend’ itself, or, to put it another way, be aware that it is an algorithm. Consciousness is immaterial, transcending the material aspects of our brain.

There are deep philosophical and even theological questions that arise, played out in various films, not least the iconic Blade Runner (1982), based also on a Philip K. Dick work, the novel Do Androids Dream of Electric Sheep? Therein, ‘replicants’ (humanoid androids) are difficult to tell apart from real humans. Some aren’t even aware they’re replicants, and think they’re human, based on implanted memories. But when they go rogue, someone’s got to tell the difference.

Whether such ‘humanoid AI’ be possible or not (and I think not, for self-consciousness cannot be programmed or ‘faked’, as it is a personal God-given quality), AI simply as an algorithm can get very close to appearing human, even, we might say, super-human. Ray Kurzweil, a former director of engineering at Google, and others warn of the ‘singularity’, when artificial intelligence supersedes human intelligence, and begins to dominate and take control, perhaps enslaving or destroying us, should it consider us a threat – unless we also ‘evolve’ and transcend our own humanity, to grow along with it. Isaac Asimov, in his I, Robot series, posited the three laws of robotics[1], the basis of which was that robots were never to harm humans – but somehow, some of them seemed to discover a workaround. Some of those who helped design AI have little idea how it now works.

Enter Elon Musk and Mark Zuckerberg and the other tech bros, who have grand plans to fuse us with the entire AI apparatus, either by neural implants (Neuralink) or other means, hooking us up to the internet in one giant univocal intellect, pace Avicenna. No mind left behind, but perhaps no mind left at all.

AI, Truth and the Eschaton

Which brings us to the theological and eschatological implications of AI, fittingly pondered in the last section of Antiqua et Nova. With increasingly ‘real’ deep fakes, how will we tell what is true from what is false, or at least un-real? What does this all mean in terms of our eternal salvation? Will we have a parallel AI ‘Pope’ and ‘Magisterium’, with AI-generated encyclicals? There is apparently a YouTube account of an AI deepfake ‘Pope Leo’ offering various addresses, already leading some astray.

There is some hope here, for since AI is made up of ‘bits’ of manufactured information, by its very nature it can never have the full richness of reality, which is created by God. We may say that AI has a finite complexity, while real things are infinitely complex. (As Saint Thomas puts it, we can never know the full nature of a housefly). Hence, there will always be something slightly ‘fake’ about AI, as much as AI tries to keep up. What is more, the truth, as Thomas says, is connatural to us, and resonates. If something seems untrue, fake or artificial, it probably is.

But AI can get close to the real thing. In its iteration now as AGI – artificial general intelligence – it can mimic human qualities, in some ways, even God Himself. Learning from all recorded human knowledge, it can seem omniscient, providing answers to any number of human problems, as well as answers to questions we didn’t even know existed.

Simon and Garfunkel sang in their 1964 ballad The Sound of Silence, ‘and the people bowed and prayed, to the neon god they made…’. Will people end up worshipping AI? Perhaps not by explicitly ‘bowing and praying’, but there are other modes of idolatry. Saint Thomas did teach that everyone has a religion of some sort, that thing, or being, which is ‘master of their affections’, that which guides our conduct, what we love most. AI may provide much that is apparently ‘good’, and it’s certainly a trove of useful knowledge. But we must keep it subservient. If we cannot serve God and mammon, we certainly cannot serve God and an AI simulacrum.

The central question here is our connection to reality, which is necessary for us to live in the truth. Saint Thomas defined truth as an adaequatio rei et intellectus, a conformity between the mind and reality.

In quoting Thomas, Antiqua et Nova ends with the implication that AI cannot by itself be a source of truth, for it isn’t real, and has no mind or soul of its own. That’s not to say that AI cannot in some way help lead us to what is true and real. But it also may lead us very much astray, depending on how we use this technology, and how we allow it to use us. Christ warns that many will be deceived in the latter days, when false prophets come in His name. But they will only be deceived because, in some way, they want to be deceived.

If there is one thing that you glean from these few words, it’s that AI at its root is simply an algorithm – albeit a very complex one – that always operates according to the mind of those who program and control the algorithm, and who can modify it according to their own all-too-human desires, intentions, even their agenda, which may not be in our own best interests, to put it mildly.

We should en passant posit that there is also the possibility that AI, a manifestation of a vast swathe of machinery, can itself become ‘possessed’, like one giant Ouija board – the abode of spirits who have no name, but are Legion.

Conclusion

Where does this leave us? Certainly not without hope, which God provides in abundance. If there’s one good thing we may take from the world descending into a virtual AI labyrinth, it is a motivation to live more real, natural, local and personal lives. Read real books, play music, bake and break bread with family and friends, converse and dialogue face to face, tend a garden, plant a rosebush, get out in God’s glorious nature – walk, hike, swim, breathe fresh air, go on a pilgrimage – and, most of all, pray, attend Mass, and receive the most real of all real things, the Holy Eucharist. For my flesh is food indeed, and my blood drink indeed. And he who eats that flesh and drinks that blood will have eternal life.

The more we live in reality here and now, the more we live in truth, and that truth will set us free, now, and in eternity.

[1] 1. A robot may not injure a human being or, through inaction, allow a human being to come to harm. 2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law. 3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.